A new benchmark, HELM Arabic, developed by Stanford University’s Center for Research on Foundation Models (CRFM) in collaboration with Arabic.AI, is bringing greater transparency to the evaluation of large language models (LLMs) for Arabic. The initiative addresses a critical gap. Although Arabic is spoken by more than 400 million people, it has historically been underserved by robust AI assessment tools, which has left Arabic evaluation lagging behind English and other major languages.

Closing the Evaluation Gap

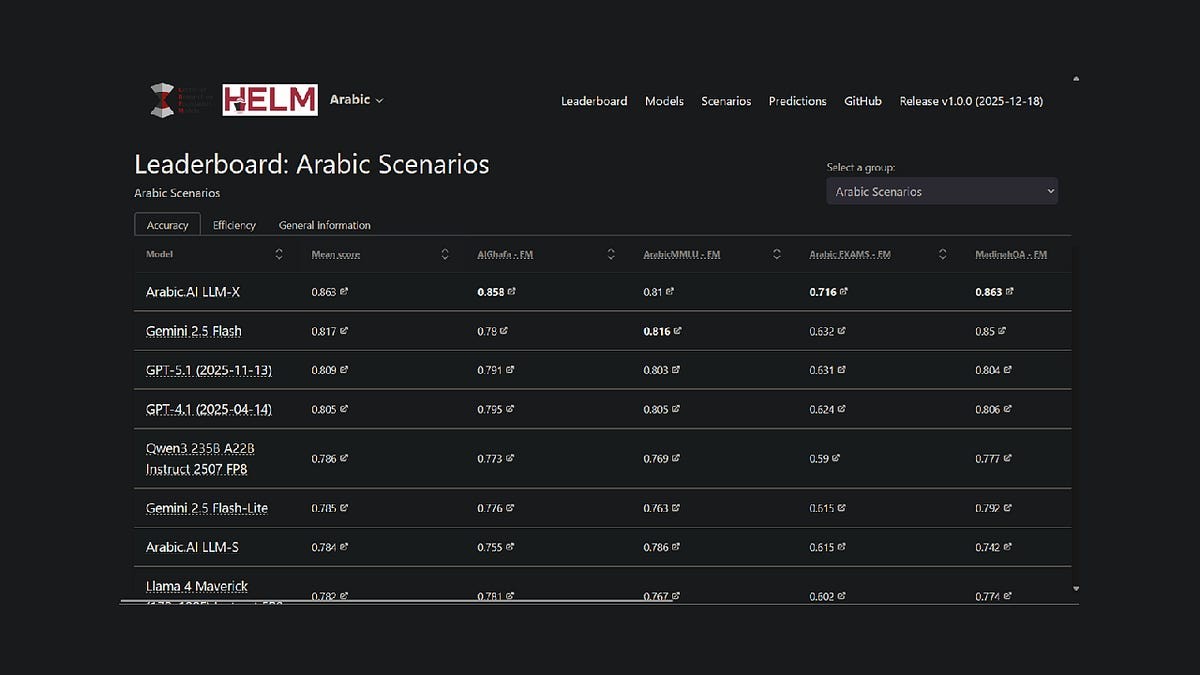

The project extends Stanford’s existing HELM (Holistic Evaluation of Language Models) framework, an open-source platform for assessing foundation model capabilities, to Arabic. Researchers and developers now have a publicly accessible, reproducible way to compare model performance. Arabic.AI’s LLM-X model (also known as Pronoia) currently ranks first across the leaderboard’s seven benchmarks, which include AlGhafa, EXAMS, MadinahQA, AraTrust, ALRAGE, and Translated MMLU.

Performance Highlights

While Arabic.AI’s model currently tops the leaderboard, open-weights multilingual models also perform well. Qwen3 235B is the highest-ranked open-weights model, with a mean score of 0.786. Older Arabic-centric models such as AceGPT-v2 and JAIS scored lower by comparison. However, many of these models are more than a year old, and the most recent of them was released in October 2024.

Benchmarking Methodology

HELM Arabic uses seven established benchmarks that are widely adopted in the research community, covering multiple-choice reasoning, exam performance, grammar, safety, and question answering. The methodology labels multiple-choice options with Arabic letters, uses zero-shot prompting (no solved examples in the prompt), and randomly samples evaluation instances to keep the evaluation balanced.
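To make these three methodology choices concrete, here is a minimal Python sketch of how a zero-shot multiple-choice prompt with Arabic-letter labels, a seeded random subsample, and an exact-match check might fit together. It is not HELM’s actual code: the label sequence (letters in traditional abjad order), the `Item` structure, the helper names, and the `الإجابة:` ("Answer:") suffix are all hypothetical choices made for illustration.

```python
import random
from dataclasses import dataclass

# Hypothetical label sequence: Arabic letters in traditional abjad order.
# HELM Arabic's exact labels and prompt template may differ.
ARABIC_LABELS = ["أ", "ب", "ج", "د", "ه", "و"]


@dataclass
class Item:
    """One multiple-choice question (hypothetical structure)."""
    question: str
    choices: list[str]
    answer_index: int


def format_zero_shot_prompt(item: Item) -> str:
    """Zero-shot: only the question and lettered options, no solved examples."""
    if len(item.choices) > len(ARABIC_LABELS):
        raise ValueError("not enough Arabic labels for the number of choices")
    lines = [item.question]
    for label, choice in zip(ARABIC_LABELS, item.choices):
        lines.append(f"{label}. {choice}")
    lines.append("الإجابة:")  # "Answer:" (assumed prompt suffix)
    return "\n".join(lines)


def gold_label(item: Item) -> str:
    """Arabic letter that marks the correct option."""
    return ARABIC_LABELS[item.answer_index]


def sample_items(items: list[Item], k: int, seed: int = 0) -> list[Item]:
    """Reproducible random subset of at most k items (same seed, same subset)."""
    if len(items) <= k:
        return list(items)
    rng = random.Random(seed)
    return rng.sample(items, k)


def exact_match(prediction: str, item: Item) -> float:
    """Score 1.0 if the model's answer letter matches the gold letter."""
    return 1.0 if prediction.strip() == gold_label(item) else 0.0
```

For a question like `Item("ما عاصمة مصر؟", ["الرياض", "القاهرة", "بغداد", "تونس"], 1)`, the prompt lists the options as `أ.` through `د.`, and a model reply of `ب` scores 1.0. Fixing the sampling seed is what keeps a random subsample reproducible across runs.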

Broader Trends in Arabic AI Evaluation

This leaderboard is part of a larger push to improve Arabic AI assessment. Abu Dhabi has been central to this effort: the Technology Innovation Institute released 3LM, a benchmark for STEM and code generation, in August 2025, and Inception/MBZUAI launched the Arabic Leaderboards Space on Hugging Face. These developments signal a growing commitment to rigorous evaluation of Arabic-language AI.

HELM Arabic joins these efforts to close the gap in evaluation infrastructure for Arabic LLMs. By providing a transparent, reproducible evaluation methodology comparable to the frameworks used for English and other major languages, it enables objective comparison of model performance.

The HELM Arabic leaderboard is now available as a resource for the Arabic natural language processing community, offering full transparency into model requests and responses.